Where there is a cloud, there’s also a high chance of receiving a stinging cold shower of unexpected cloud computing costs.

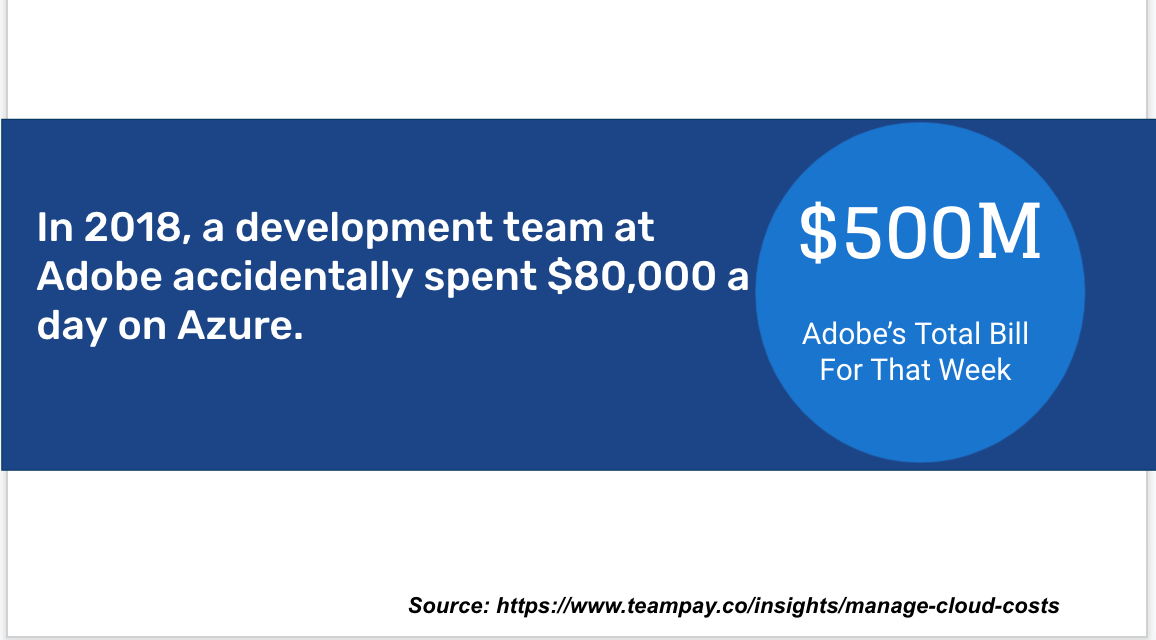

That’s how Adobe executives felt when their development team accidentally burned through $80,000 a day on cloud computing, racking up a bill of more than $500,000 in a week.

Such cloud overspending happens in every company, albeit on a smaller scale, because public cloud vendors have made it simple to provision resources in one click.

Unpredictable cloud computing costs may appear to be a moderate “price” to pay for staying connected and operational on resilient cloud infrastructure. Yet, if left uncontained, ongoing cloud operating costs can offset all the revenue gains from migration.

Why cloud cost optimization should be ruthless

Exodus to the cloud is real. Last year, enterprise spending on cloud computing increased by 35%, hovering at $130 billion and finally beating data center investments.

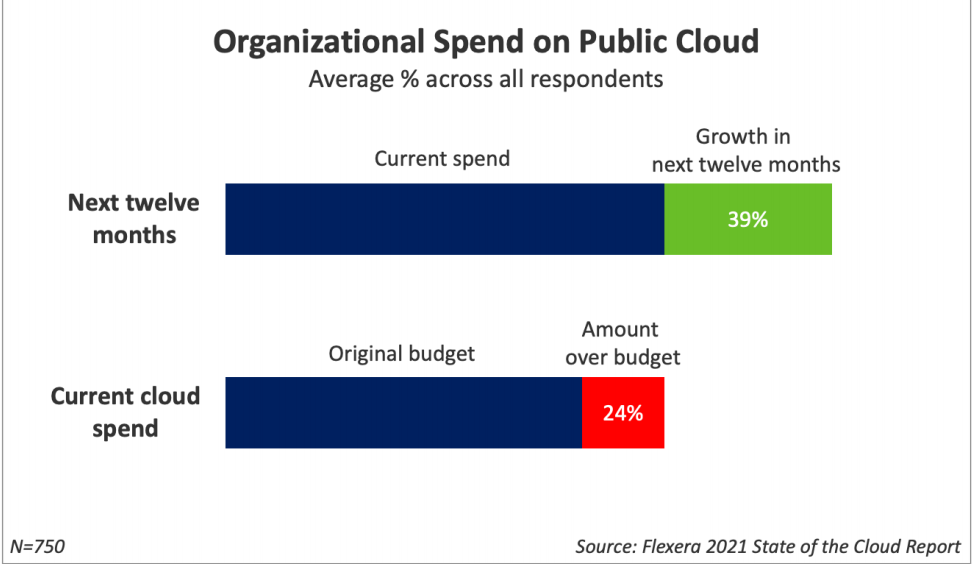

But roughly the same share of cloud resources (30% to 35%) goes to waste, as per Flexera’s 2021 State of the Cloud Report.

(Overspending on the public cloud is rampant. Over 24% of public cloud computing costs exceed the original budget. Image Source.)

How come public cloud cost containment remains problematic, especially given the fact that every public cloud platform offers tools galore for cloud cost optimization?

After analyzing over 45,000 AWS accounts using CloudFix, our AWS cost optimization software, we found that cloud resource wastage is rarely a one-off event (though that happens too). It is a continuous problem.

As the corporate cloud infrastructure expands, the intricacies of containing cloud costs among provisioned instances, sandbox environments, storage systems, and even multiple clouds become too tangled.

Moreover, cloud providers themselves don’t really help:

- Usage-based systems gloss over the real problem — lack of clarity into how those resources are actually consumed by users, systems, and connected devices across all cloud offerings.

- The AWS, Azure, and Google Cloud ecosystem of cloud services has also grown increasingly complex, to the point where even experienced cloud architects cannot always select the optimal types of configurations.

But the above doesn’t mean that you should be leaving cloud money on the table. On the contrary, you should get proactive about recouping those costs. We’ll show you how it’s done on AWS.

How to optimize cloud computing costs on AWS

From one cloud overspender to another: our AWS bill was overblown too.

Across 40,000+ AWS accounts, we were wasting thousands of dollars. Of course, we tried all the manual fixes and a bunch of cloud cost optimization tools too.

Most solutions pointed towards resource-hungry applications. Yet none showed how to fix the underlying issues.

Since we have over 4,000 developers, we thought that perhaps instead of asking all of them to look for fixes, we should build a tool to do just that. That’s how CloudFix came about.

CloudFix is an automated AWS cost management tool that identifies and patches leaking cloud spending. Installed in one click, CloudFix runs in the background without any disruptions and silently reclaims wasted infrastructure spending. Today, on a $20M annual spend across several AWS accounts, we claw back about $150K in annualized savings every week and drive over 50% in annualized cloud cost savings for our clients.

And here are 10 lessons we learned on our journey of reducing cloud costs.

1. Get engineers to evaluate the costs first

The first lesson is simple: people drive up cloud computing costs.

You have two concurrent groups who’ll influence the costs:

- Execs and finance folks who estimate the total cloud budget for the quarter/year. Their projections are mostly based on past usage and growth plans. If you fail to address the overspending in Q1, it will migrate and magnify during the next planning cycle.

- Engineering teams. The architecture choices your developers make each day will determine the total cloud bill. No fancy AWS cost management tool will help you unless you explain the importance of cloud financial management.

Ideally, the IT and Finance departments should be well-aligned on:

- Estimated cloud computing costs (and the reasoning behind some caps)

- Resource tagging decisions (which resources are for testing and which are for production)

- Consistent costs — spending on predictable, budgeted workloads and reserved instances.

- Variable costs — on-demand and spot instances, auto-scaling costs, serverless computing, etc.

When the two have a mutual understanding of what constitutes a reasonable cloud bill, they don’t fight over subtle deviations but focus on optimizing the big picture of costs.

2. Do an inventory of all cloud resources

Doing a tally of all your AWS resources is the cornerstone of subsequent optimization.

This is a basic but important step. You can’t fix what you cannot see.

You have two goals here:

- Set up automation for shutting down resources when they are not in use. For example, switching off development and test environments outside of working hours can lead to up to 75% in savings.

- Find and fix over-provisioned resources. Categorize these into buckets such as “no longer needed”, “can be downgraded”, “worth migrating to an alternative service.”
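To see where a figure in the region of 75% comes from, it helps to count the hours an environment actually needs to be on. The sketch below is a rough illustration only: the schedules are hypothetical, and it counts instance-hours, so storage that stays provisioned while an instance is stopped is not included.

```python
HOURS_PER_WEEK = 7 * 24  # 168 hours in a week


def off_hours_saving(hours_on_per_day: float, days_on_per_week: int) -> float:
    """Fraction of instance-hours saved compared with running 24/7."""
    if not 0 <= hours_on_per_day <= 24 or not 0 <= days_on_per_week <= 7:
        raise ValueError("schedule does not fit into a week")
    hours_on = hours_on_per_day * days_on_per_week
    return 1 - hours_on / HOURS_PER_WEEK


# Hypothetical schedules for a dev/test environment.
for hours, days in [(8, 5), (10, 5), (12, 5)]:
    saving = off_hours_saving(hours, days)
    print(f"{hours}h x {days}d per week: {saving:.1%} fewer instance-hours")
```

An environment that runs only during an eight-hour working day, five days a week, is switched off for roughly three quarters of the week.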

Here are some common types of over-provisioned AWS resources to hunt for:

- Underutilized clusters in Amazon Redshift

- Idle instances in Amazon RDS

- Idle cluster nodes in Amazon ElastiCache

- Cases of suboptimal container resource usage

- Any other “stray” instances or VMs

Hunting these down by hand can be daunting, so automate the search as soon as you have a chance.

3. Consider migrating to AWS gp3 EBS volumes

Last year, Amazon launched gp3 volumes for Amazon EBS (Elastic Block Store) as the successor to gp2, which most teams still use.

The advantage of gp3 is that you can increase IOPS and throughput independently, without provisioning extra block storage capacity. Overall, gp3 provides a predictable baseline of 3,000 IOPS and 125 MiB/s regardless of volume size, which makes it attractive and cost-effective for high-load applications.

But we also found that it is worth migrating gp2 volumes with less than 3,000 IOPS to gp3. By doing so, you can save around 20%, as gp3 volumes cost $0.08 per GB-month compared to $0.10 per GB-month for gp2.
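The 20% figure follows directly from the two prices. Here is a small sketch of the arithmetic, together with a check for which gp2 volumes fall under the 3,000 IOPS mark. It assumes the gp2 baseline rule as we understand it from the AWS documentation (3 IOPS per GiB, with a floor of 100 and a ceiling of 16,000); the function names are made up for illustration, and prices vary by region.

```python
GP2_PRICE_PER_GB_MONTH = 0.10  # from the text above
GP3_PRICE_PER_GB_MONTH = 0.08  # from the text above
GP3_BASELINE_IOPS = 3000


def gp2_baseline_iops(size_gib: int) -> int:
    """Assumed gp2 rule: 3 IOPS per GiB, at least 100, at most 16,000."""
    return min(max(3 * size_gib, 100), 16_000)


def is_migration_candidate(size_gib: int) -> bool:
    """True when the gp2 volume gets less than the gp3 baseline IOPS."""
    return gp2_baseline_iops(size_gib) < GP3_BASELINE_IOPS


def monthly_saving(size_gib: int) -> float:
    """Dollars saved per month by storing the same capacity on gp3."""
    return size_gib * (GP2_PRICE_PER_GB_MONTH - GP3_PRICE_PER_GB_MONTH)


for size in (100, 500, 2000):  # hypothetical volume sizes in GiB
    print(
        f"{size} GiB: gp2 baseline {gp2_baseline_iops(size)} IOPS, "
        f"candidate={is_migration_candidate(size)}, "
        f"saving ${monthly_saving(size):.2f}/month"
    )

relative = 1 - GP3_PRICE_PER_GB_MONTH / GP2_PRICE_PER_GB_MONTH
print(f"Relative saving on storage: {relative:.0%}")
```

Under this rule, any gp2 volume smaller than 1,000 GiB has a baseline below 3,000 IOPS, so it gains performance and costs less after the move.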

Read more about migrating from EBS gp1/gp2 to gp3 and estimated cloud cost savings.

4. Watch out for AWS EBS volumes unattached to EC2 instances

Staying on the subject of Amazon EBS: did you know that you are getting billed even when your instances are stopped?

Amazon charges for all provisioned EBS volumes, whether they are attached to a running instance, attached to a stopped one, or not attached to anything at all. That is because, unlike instances, EBS volumes are billed in “gigabyte-months” of provisioned storage. Thus, ask your dev people to delete volumes and snapshots they no longer need (after they have backed up the data!).

P.S. CloudFix can do that for you automatically.
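If you want to look for such volumes by hand first, the filtering logic is short. The sketch below is not how CloudFix works; it is a minimal illustration that assumes volume records shaped like the `Volumes` entries returned by the EC2 `describe_volumes` call (with `State`, `Attachments`, and `Size` keys). The sample data and the flat price are hypothetical.

```python
from typing import Dict, Iterable, List


def unattached_volumes(volumes: Iterable[Dict]) -> List[Dict]:
    """Keep volumes that are in the 'available' state with no attachments."""
    return [
        v for v in volumes
        if v.get("State") == "available" and not v.get("Attachments")
    ]


def monthly_cost(volumes: Iterable[Dict], price_per_gb_month: float) -> float:
    """Rough monthly storage bill for the given volumes (Size is in GiB)."""
    return sum(v["Size"] for v in volumes) * price_per_gb_month


# Hypothetical sample shaped like a describe_volumes response.
sample = [
    {"VolumeId": "vol-a", "State": "in-use", "Size": 200,
     "Attachments": [{"InstanceId": "i-1"}]},
    {"VolumeId": "vol-b", "State": "available", "Size": 500, "Attachments": []},
    {"VolumeId": "vol-c", "State": "available", "Size": 100, "Attachments": []},
]

orphans = unattached_volumes(sample)
print([v["VolumeId"] for v in orphans])
print(f"${monthly_cost(orphans, 0.10):.2f} per month for storage nobody uses")
```

Treat the result as a list of candidates to review with the owning team, not as a list of volumes that are safe to delete.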

5. Reduce oversized resources on EC2 instances

Right-sizing instances may seem like an art rather than a science when you don’t know how much is “too big.”

So first, check the historical data on your instance usage. You can analyze CPU utilization and single out a set of candidates for downsizing.

But don’t go overboard. Instead, reduce instance size progressively. During week one, switch the instance from t3.xlarge to t3.large. Analyze resource consumption and performance. If all is good, go another notch lower during the second week and see what happens.
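The one-notch-at-a-time rule is easy to encode. The following sketch covers the t3 family used in the example above; the 40% peak CPU threshold is a hypothetical cut-off, not a recommendation, and real decisions should also look at memory, network, and disk metrics.

```python
from typing import Optional

# Sizes available in the t3 family, smallest to largest.
T3_SIZES = ["nano", "micro", "small", "medium", "large", "xlarge", "2xlarge"]


def next_smaller(instance_type: str) -> Optional[str]:
    """Return the t3 type one size down, or None if already the smallest."""
    family, size = instance_type.split(".")
    if family != "t3" or size not in T3_SIZES:
        raise ValueError(f"unsupported instance type: {instance_type}")
    index = T3_SIZES.index(size)
    return f"{family}.{T3_SIZES[index - 1]}" if index > 0 else None


def should_downsize(peak_cpu_percent: float, threshold: float = 40.0) -> bool:
    """Hypothetical rule: downsize when peak CPU stayed under the threshold."""
    return peak_cpu_percent < threshold


current = "t3.xlarge"
weekly_peaks = [22.0, 31.0, 58.0]  # hypothetical peak CPU % for three weeks
for week, peak in enumerate(weekly_peaks, start=1):
    target = next_smaller(current)
    if target and should_downsize(peak):
        print(f"week {week}: peak {peak}% -> move {current} to {target}")
        current = target
    else:
        print(f"week {week}: peak {peak}% -> keep {current}")
```

Each step moves down exactly one size, and the loop stops shrinking as soon as the observed peak crosses the threshold.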

Also, if you notice that a reduced instance demands extra capacity, don’t rush to upscale it via the standard route.

Instead, look at EC2 Spot Instances, which sell spare EC2 capacity at a steep discount (up to 90% off On-Demand prices). Prices are set by Amazon and adjusted dynamically depending on current supply and demand, and AWS can reclaim the capacity at short notice. Spot instances are therefore well-suited for fault-tolerant workloads such as containerized apps, CI/CD, or big data analytics.

6. Try Amazon S3 intelligent tiering if you run a data lake

Amazon S3 is a popular solution for hosting data lakes.

But the problem is that it’s not the most affordable storage service, so your cloud costs can get steep, especially if you have a data science team that loves pushing data into the lake and then forgetting about it.

Amazon S3 Intelligent-Tiering monitors how your objects are accessed and automatically moves infrequently used ones into a lower-cost access tier. Objects that have not been accessed for 30 consecutive days are moved to its infrequent access tier.

Then, you can set it to push archival data to its optional archive tiers, which are priced in line with S3 Glacier and S3 Glacier Deep Archive and cost up to 95% less than S3 Standard. Good savings in both cases!
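A tiering schedule of this kind can also be written down as an S3 Lifecycle configuration. Note that lifecycle rules act on object age rather than on access patterns, so this is a related but different mechanism from Intelligent-Tiering. The sketch below only builds the configuration document; the rule ID, the prefix, and the 90 and 180 day thresholds are hypothetical, and the dictionary shape is our understanding of what boto3's `put_bucket_lifecycle_configuration` expects, so check it against the current documentation before use.

```python
import json


def tiering_rule(prefix: str = "") -> dict:
    """Build an age-based lifecycle configuration for objects under prefix."""
    transitions = [
        {"Days": 30, "StorageClass": "ONEZONE_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},        # hypothetical threshold
        {"Days": 180, "StorageClass": "DEEP_ARCHIVE"},  # hypothetical threshold
    ]
    days = [t["Days"] for t in transitions]
    # Each transition must happen strictly later than the previous one.
    if any(later <= earlier for earlier, later in zip(days, days[1:])):
        raise ValueError("transition days must be strictly increasing")
    return {
        "Rules": [
            {
                "ID": "tier-down-cold-data",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": transitions,
            }
        ]
    }


config = tiering_rule("raw/")
print(json.dumps(config, indent=2))
```

Keeping the rule as plain data makes it easy to review in a pull request before anyone applies it to a bucket.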

7. Alternate between Amazon S3 Infrequent Access and One Zone Infrequent Access

Chances are high that you have a ton of unused data that can tolerate lower availability.

Those objects are strong contenders for getting moved to S3 Infrequent Access (IA) or One Zone IA — both provide significantly cheaper storage.

Here are the availability tradeoffs:

- Amazon S3 Standard. Availability: 99.99%. Annual downtime: 52m 36s

- S3 Infrequent Access. Availability: 99.9%. Annual downtime: 8h 45m

- S3 One Zone Infrequent Access. Availability: 99.5%. Annual downtime: 1d, 19h 49m
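The downtime figures are simply the unavailable fraction of a year. A minimal sketch of the conversion, assuming a 365.25-day year (which is what reproduces the numbers above):

```python
from datetime import timedelta

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumption: average year length


def annual_downtime(availability_percent: float) -> timedelta:
    """Maximum yearly downtime implied by an availability percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    unavailable_fraction = (100 - availability_percent) / 100
    return timedelta(seconds=round(unavailable_fraction * SECONDS_PER_YEAR))


for name, availability in [
    ("S3 Standard", 99.99),
    ("S3 Infrequent Access", 99.9),
    ("S3 One Zone Infrequent Access", 99.5),
]:
    print(f"{name}: {availability}% -> {annual_downtime(availability)}")
```

Each extra "nine" cuts the allowed downtime by a factor of ten, which is why the jump from 99.9% to 99.5% looks so large.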

Moving your data to One Zone IA also means lower resilience. Since all your data will be stored in a single Availability Zone (without replication to other zones), a major failure of that zone can leave it unavailable or even lost. Thus, it may not be the best option for storing, for instance, compliance records.

Apart from availability, you should also mind data retrieval costs for S3 IA. The standard charge is $0.01 per GB retrieved, on top of the standard S3 Data Transfer fee, plus $0.01 per 1,000 lifecycle transition requests when moving objects from S3 Standard to Infrequent Access.

Finally, the minimum billable object size is 128KB. If you are transferring smaller data objects, you still pay for 128KB of storage.
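These three charges are easy to estimate before you move anything. The sketch below uses only the rates quoted above; the object sizes are hypothetical, and it assumes 1 GB means 2^30 bytes for billing purposes.

```python
from typing import Iterable

MIN_BILLABLE_BYTES = 128 * 1024       # minimum billable object size in IA
RETRIEVAL_PRICE_PER_GB = 0.01         # from the text above
TRANSITION_PRICE_PER_1000 = 0.01      # from the text above
BYTES_PER_GB = 1024 ** 3              # assumption: binary gigabytes


def billable_bytes(object_sizes: Iterable[int]) -> int:
    """Total bytes billed, rounding every small object up to 128 KB."""
    return sum(max(size, MIN_BILLABLE_BYTES) for size in object_sizes)


def transition_cost(object_count: int) -> float:
    """One-off charge for moving objects from S3 Standard into IA."""
    return object_count / 1000 * TRANSITION_PRICE_PER_1000


def retrieval_cost(bytes_retrieved: int) -> float:
    """Charge for reading data back out of IA (data transfer not included)."""
    return bytes_retrieved / BYTES_PER_GB * RETRIEVAL_PRICE_PER_GB


# Hypothetical data set: one million 4 KB log fragments.
sizes = [4 * 1024] * 1_000_000
actual = sum(sizes)
billed = billable_bytes(sizes)
print(f"stored {actual / BYTES_PER_GB:.2f} GB, billed as {billed / BYTES_PER_GB:.2f} GB")
print(f"transition charge: ${transition_cost(len(sizes)):.2f}")
print(f"retrieving everything once: ${retrieval_cost(actual):.2f}")
```

For buckets full of tiny objects, the billed size can be many times the real size, which may cancel out the lower per-GB rate.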

8. Look for discounts

It’s ok to be a bargain seeker, especially when your cloud bill is giving you shivers.

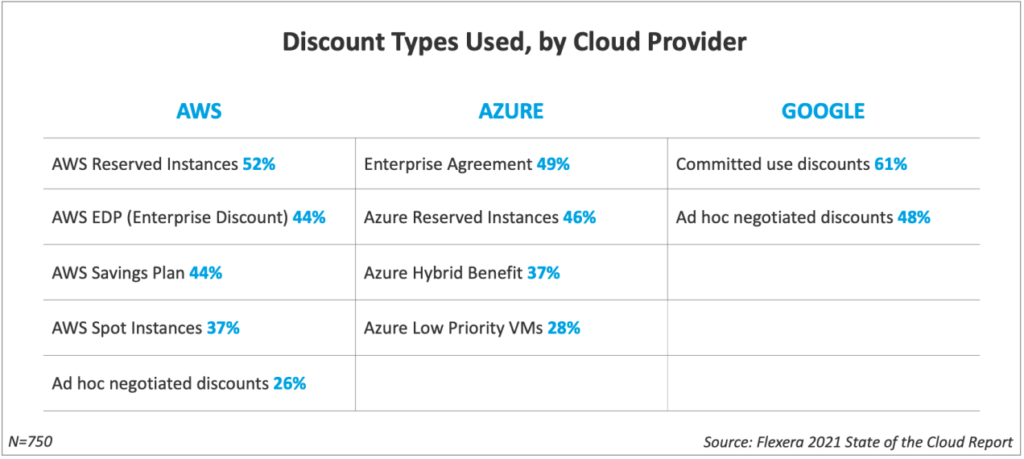

AWS, as well as other public cloud providers, have quite a few attractive saving plans:

(AWS has plenty of discount plans to profit from Image Source)

A word of caution on reserved instances on AWS.

You can choose between Standard and Convertible reserved instances.

- Standard reserved instances are more affordable, but cannot be exchanged for another instance type. You can, however, sell them on the AWS Reserved Instance Marketplace.

- Convertible reserved instances cannot be resold but can be exchanged for a more expensive instance type. Note: you cannot downgrade a convertible instance back. So think twice before upgrading!

Bonus tip: Go look for AWS credits.

Here are several ethical ways to get free AWS credits:

- Check if you qualify for AWS Activate

- Attend AWS webinars and events: you’ll learn something and may get some free credits doled out

- Apply for the AWS Imagine Grant Program

- Publish an Alexa skill and receive $100/mo. while it’s active

- Sign up for the Ship program from Product Hunt for $5,000 in credit.

Also, look for other AWS partners. You probably already have one in your current stack, so go and claim your credit!

9. See if you can profit from Amazon EFS One Zone

Amazon Elastic File System (Amazon EFS) is a serverless, flexible file system for storing shared data across EC2 instances, ECS, EKS, AWS Lambda, and AWS Fargate.

Until March 2021, the service automatically determined where to store your data (typically across several availability zones). Now, however, you can choose a single zone for EFS storage and transfer all the data to it.

Amazon EFS One Zone storage works similarly to One Zone Infrequent Access storage classes in S3:

- Select a low-cost region (e.g. Virginia if you are US-based)

- Enable and configure lifecycle management

- Transfer infrequently accessed data

Enjoy a lower cloud storage bill.

10. Do regular housekeeping of AWS RDS

Database storage optimization often gets overlooked. That’s a shame because there are plenty of fixes and fine-tuning you can do to reduce your AWS RDS costs.

On the surface, RDS instances may appear similar to EC2 instances. That’s true, but there are also several important caveats.

First, unlike EC2, RDS supports only hourly billing. Secondly, the RDS DB instance cost varies depending on the relational database engine you are using.

AWS supports 6 popular DB engines:

- 3 open-source: MySQL, PostgreSQL, MariaDB

- 3 proprietary: Amazon Aurora, Oracle, Microsoft SQL Server.

The licenses for the commercial engines (Oracle and Microsoft SQL Server) are not included in the base RDS price. So you have to either bring your own license or pay a higher hourly rate that includes one.

While changing a database engine is not the easiest task, migrating to an open-source solution or Amazon Aurora can pleasantly lower your cloud bill.

The second most important tip for optimizing AWS RDS costs is selecting the right types of instances.

Granted, this is easier to do since you have just 18 RDS database instance types versus 254 instance types on EC2. Still, you have to stay mindful of your provisioning and usage patterns.

Here are some important caveats:

- RDS reserved instances can save you money in exchange for a commitment. But the discounts are tied to a specific DB instance family (e.g. db.m5). This means your discount applies to instances in the same family, regardless of size, but you can’t apply it to a different instance family. In other words, there are no Convertible reserved instances.

- DB instance costs vary by region for both on-demand and reserved instances. Obviously, reserved instances come with bigger cost savings. But some may be surprised by the extent.

Here’s a quick comparison of AWS region costs for on-demand vs reserved db.m5.xlarge instance with MySQL engine across regions:

- On-demand US East 1: $2,996/year

- Reserved US East 1: $1,791/year (40% discount)

- On-demand SA East 1: $4,047/year

- Reserved SA East 1: $1,825/year (55% discount).

Overall, you can get a 30%-40% saving by getting a reserved instance in a cheaper AWS region. Thus, consider doing a “spring clean” to see if you could muster more reserved instances.
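The discount percentages can be recomputed from the yearly prices listed above. A short sketch of that calculation, where the discount is taken as one minus the ratio of the reserved price to the on-demand price (the region keys are just labels for this example):

```python
# Yearly prices for db.m5.xlarge with MySQL, as listed above:
# region -> (on-demand, reserved)
PRICES = {
    "us-east-1": (2996, 1791),
    "sa-east-1": (4047, 1825),
}


def discount(on_demand: float, reserved: float) -> float:
    """Share of the on-demand price saved by reserving."""
    if on_demand <= 0:
        raise ValueError("on-demand price must be positive")
    return 1 - reserved / on_demand


for region, (on_demand, reserved) in PRICES.items():
    saved = on_demand - reserved
    print(
        f"{region}: ${on_demand:,} -> ${reserved:,} "
        f"(saves ${saved:,}/year, {discount(on_demand, reserved):.0%})"
    )
```

Running the same calculation across all the regions you operate in shows quickly where a reservation pays off most.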

Finally, you can also consider migrating to Aurora Serverless, a new on-demand, auto-scaling version of the Aurora DB engine.

The benefit of Aurora Serverless is that it bills you only for the Aurora capacity units (ACUs) your database actually uses. An ACU is 2 GB of memory, plus corresponding CPU and networking resources. So when you stop a database you are not using, you are charged only for storage, not for compute. This can be a great option for housing databases with rarely accessed data or those kept within close reach for a postponed analytics project.

To Conclude: Battle Cloud Cost with Automation

The conversation about AWS costs is never an easy one to have, neither with the developers nor with the finance people.

The first cohort is reluctant to waste precious time on optimizing AWS infrastructure (and then breaking things, instead of fixing them). Plus, no one wants to be the person in charge of “boring” stuff such as resource tagging, monitoring, and optimization policy development. AWS also issues so many updates that you probably need to hire a separate “watchdog” to sift through these every other day.

The finance folks, on the other hand, do not always understand the challenges of estimating the monthly AWS bill when workloads vary and projects scale rapidly. Yet they want to have precise numbers.

Our team was not a fan of the above dynamics. Thus, we built our automated AWS cost optimization platform that does all the scanning for you and then issues the fixes. So the finance people get their numbers, while developers don’t have extra overhead and can stay focused on strategic initiatives.

Learn more about CloudFix.